When your desires warp reality (and how to fight back).

Summary of the third lecture by Gérald Bronner at La Sorbonne on "developing critical thinking", delivered in April 2025

"The human understanding when it has once adopted an opinion (either as being the received opinion or as being agreeable to itself) draws all things else to support and agree with it. And though there be a greater number and weight of instances to be found on the other side, yet these it either neglects and despises, or else by some distinction sets aside and rejects, in order that by this great and pernicious predetermination the authority of its former conclusions may remain inviolate." Francis Bacon, Aphorism 46

Like many others, Gérald Bronner is trying to make his own contribution to society on a subject that has deeply concerned him for at least 15 years. The hope with his Sorbonne lectures is that his materials, ideas and proposals will be widely shared, because the more people are able to step back from their own thought processes, the better our chances of resisting the traps of collective irrationality.

The troubling reality: Our belief system protects itself against contradiction and lets itself be influenced by our desires. We can believe things simply because they align with what we want to believe... and that's obviously not the same as believing what's actually true!

The Disturbing Ease of Self-Manipulation

The "Carcinogenic" Coffee Experiment (Deutsch)

Accomplices spread the rumor in a company that coffee is carcinogenic (false information). After six weeks:

30% of heavy consumers (3 cups/day) believe this information

90% of light consumers (1 cup or less) believe it

The mechanism: If I drink a lot of coffee, it's not in my interest for this information to be true, so I'll tend to reject the information received. Our interests contaminate our beliefs.

The Two Hearts Experiment (Tversky & Quattrone)

Researchers explain there's a "good" and a "bad" type of heart, then have participants plunge their hands in ice water to "test" which they have. Depending on which result they were told signals the "good" heart, participants hold out noticeably longer or give up sooner: their tolerance shifts in the direction they want to be true.

The troubling lesson: We're even capable of rigging reality to make it "bend" to our desires! At least temporarily.

Cognitive Dissonance and Motivational Bias: The Sect That "Saved" the World

Festinger's principle: When faced with a contradiction that threatens our belief system, we defend it, even by rewriting reality! In the battle you're about to wage against reality, expect to lose. Unless you can reinterpret reality thanks to the plasticity of belief...

The True Story of Marian Keech (1950s)

She predicts the end of the world on a specific date and announces that her group will be saved by flying saucers. Psychologist Festinger infiltrates the group to observe their behavior when the prophecy (inevitably!) fails.

The moment of truth: Midnight passes, no saucers, no catastrophe. A group member says: "I've burned all my bridges, I have no choice, it has to be true."

The reality flip: At 6 AM, faced with the prophecy's failure, Marian Keech finds THE solution: "We prayed so hard that we saved the world!" And suddenly, the group members, who had been very discreet until then, start proselytizing.

The lesson: When beliefs become entangled with a person's self-image, they'll defend them at all costs. These are motivational biases - beliefs based not on arguments, but on our interests.

Confirmation Bias: Why We'd Rather Be Right Than Correct

The basic mechanism: Our minds move more easily with affirmatives than negatives. When we want to test a statement, we generally look for examples that confirm the rule rather than trying to disprove it.

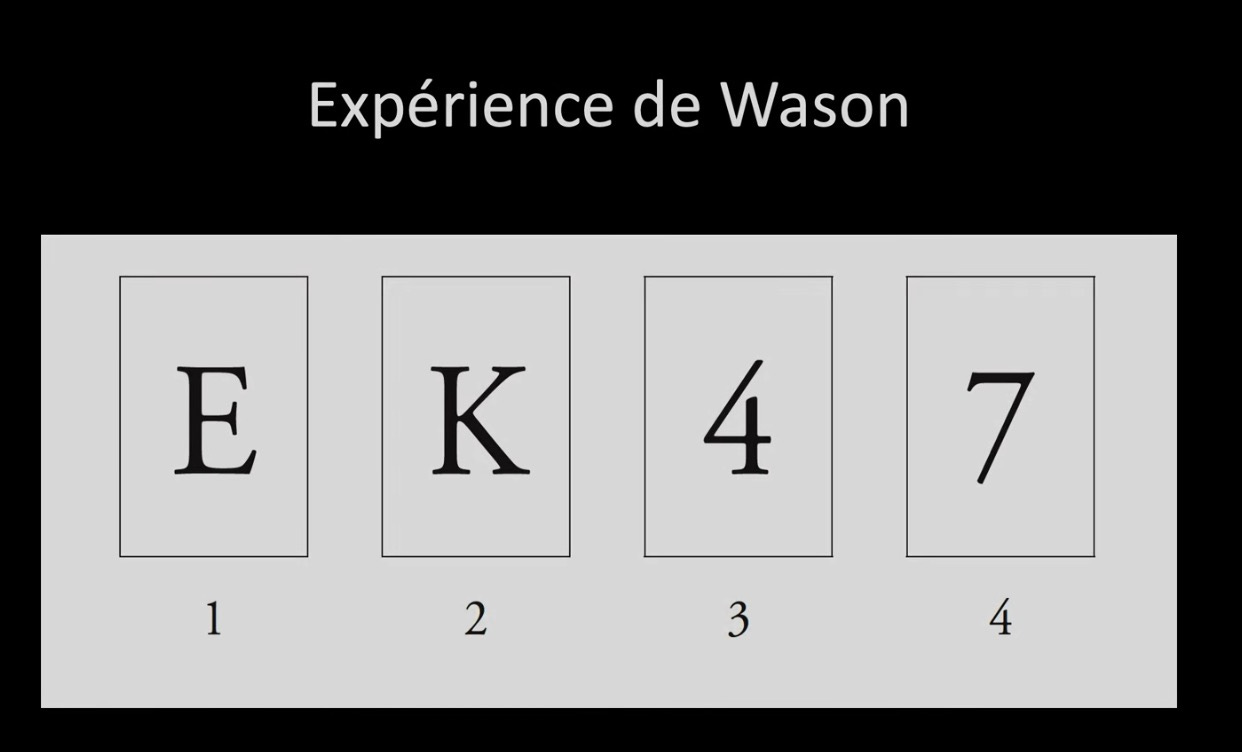

Wason's Four-Card Experiment (1966)

The rule to test: "If a card has a vowel on one side, it has an even number on the other."

The classic mistake: People propose turning over the card with an even number, but whatever it reveals, even a consonant, it neither confirms nor disproves the rule, because the rule says nothing about what must be behind an even number.

The right answer: You need to turn over the card with a vowel and the card with an odd number. If the vowel hides an odd number, or the odd number hides a vowel, you've disproved the rule.

Why it's hard: Disproving a rule isn't "natural"... confirming it is!
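The logic of the four-card task can be checked mechanically. The sketch below is only an illustration: the visible faces "A", "K", "4" and "7" are assumed for the example (the summary does not list the actual cards), and the helper names are hypothetical. For each card, it asks whether any possible hidden face could break the rule; only those cards are worth turning over.

```python
VOWELS = set("AEIOU")

def violates(letter, number):
    """The rule 'vowel -> even number' is broken only by a vowel paired with an odd number."""
    return letter in VOWELS and int(number) % 2 == 1

def can_falsify(visible, letters="AK", numbers="47"):
    """True if some hidden face could make this card a counterexample.

    `letters` and `numbers` are assumed sample faces (one vowel, one consonant,
    one even number, one odd number), not data from the original experiment.
    """
    if visible.isalpha():
        return any(violates(visible, n) for n in numbers)
    return any(violates(l, visible) for l in letters)

cards = ["A", "K", "4", "7"]  # assumed visible faces
must_turn = [c for c in cards if can_falsify(c)]
print(must_turn)  # ['A', '7']
```

The even card "4" never appears in the result: no hidden letter can turn it into a counterexample, which is why checking it only feels like testing the rule.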

Epidemics of Observation, Not Anomalies

The Seattle Windshield Affair (1950s)

The rumor: Windshields are cracking due to nuclear fallout.

Eisenhower's investigation: It found that ALL windshields have micro-cracks everywhere.

Verdict: It wasn't an epidemic of damaged windshields, but an epidemic of examined windshields.

The Saint-Cloud Antenna and the Nocebo Effect

During the installation of a 3G antenna, residents develop headaches, insomnia, metallic taste... until they discover the antenna wasn't even turned on yet.

The nocebo effect: Harmful effects generated psychologically by believing in the action of a totally inert object, amplified by confirmation bias.

The Barnum Effect and the Fake Psychic Experiment

The setup: 160 people from a psychic fair receive identical vague predictions (taken from an old TV magazine).

Spectacular results:

80% of strong believers: fully satisfied

37% of weak believers: satisfied

The mechanism: Ambiguous predictions + desire to believe = subjective confirmation (Barnum effect). The Barnum effect is an expression of confirmation bias that allows people to find a narrative around their lives.

Availability Bias and Optimism Bias

Tversky and Kahneman's definition: The tendency to estimate probability based on how easily we can find examples illustrating the event.

Real-world applications:

In couples: When you ask each partner what percentage of household chores they do, the total typically exceeds 100%. We remember what we do better than what others do.

Among believers: Someone who believes in psychic readings particularly remembers the "confirmations" and sincerely declares: "I empirically observed that it worked."
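The household-chores arithmetic can be made concrete with a toy calculation. This is a sketch under invented assumptions, not data from the lecture: the chores are split exactly 50/50, and each partner recalls 90% of their own tasks but only 60% of the other's. The function name and all numbers are hypothetical.

```python
def perceived_share(own_tasks, partner_tasks, recall_own, recall_partner):
    """Share of the chores a person believes they do, based only on what they remember."""
    remembered_own = own_tasks * recall_own
    remembered_partner = partner_tasks * recall_partner
    return remembered_own / (remembered_own + remembered_partner)

# Assumed scenario: a true 50/50 split, with better recall for one's own tasks.
share_a = perceived_share(50, 50, recall_own=0.9, recall_partner=0.6)
share_b = perceived_share(50, 50, recall_own=0.9, recall_partner=0.6)
total = share_a + share_b

print(f"A claims {share_a:.0%}, B claims {share_b:.0%}, total {total:.0%}")
```

With these made-up recall rates, each partner sincerely claims more than half of the work, so the two estimates add up to more than 100% even though nobody is lying.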

The associated optimism bias:

70% of drivers think they drive better than average

90% of university professors believe they're better than their colleagues

70% of people think they're more humble than average!

Why it works: With homeopathy or pseudo-medicines, you eventually heal naturally (colds), you remember the successes and find excuses for failures ("I took it wrong").

Three Practical Antidotes

1. Example Exhaustion

Ask for 12 examples that demonstrate a belief. Finding a few examples is easy; finding many is harder. This helps you realize that what you've erected as a "law" rests on only a few experiences.

2. Question Your Preferences (Pre-bunking)

Before getting informed, ask yourself: "What do I want to be true?" This verbalization makes you aware of the potential trap.

3. Diversify Your Sources

Don't get trapped in epistemic bubbles. Maintain intellectual competition - it's what best prevents radicalization.

In the myth of Phaeton, Phaeton wants to prove his descent and asks his father Helios to let him drive his chariot (the chariot of the sun). But he can't master the chariot, and after wreaking havoc in the skies and on the earth (burning large chunks of it), he eventually falls from it and meets his death. Sometimes wishful thinking can have dramatic consequences.